Serverless Observability: Mastering Monitoring, Logging, and Tracing

In the dynamic world of serverless computing, understanding the health and performance of your applications is paramount. Unlike traditional monolithic applications, serverless architectures, with their distributed and ephemeral nature, pose unique challenges for operational visibility. This is where observability comes into play, providing the necessary insights to diagnose issues, optimize performance, and ensure reliability.

What is Serverless Observability?

Observability is more than just monitoring. Monitoring tracks predefined signals (e.g., CPU utilization, memory usage) to tell you *whether* a system is working; observability helps you understand *why* it behaves the way it does. It means collecting enough data from your system that you can ask arbitrary questions about its internal state, including ones you did not anticipate, and derive meaningful answers.

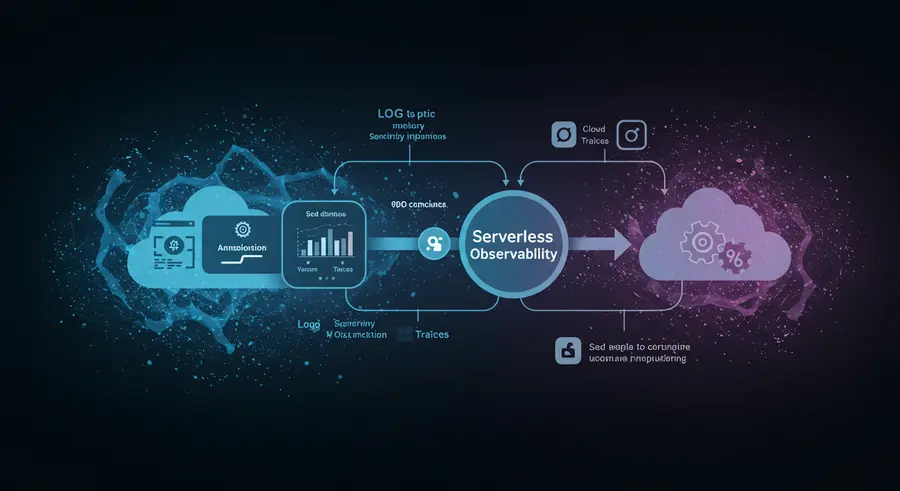

For serverless functions, this means collecting, analyzing, and acting upon three key pillars:

- Logs: Records of events that happen within your functions, providing context and details about execution flow, errors, and custom application-specific messages.

- Metrics: Numerical data points collected over time, representing the performance and health of your functions (e.g., invocations, errors, duration, throttles).

- Traces: End-to-end views of requests as they flow through multiple serverless functions and services, illustrating the dependencies and latency across distributed components.

The Challenges of Observability in Serverless

Serverless architectures, while offering immense benefits in scalability and cost-efficiency, introduce several challenges for traditional monitoring approaches:

- Distributed Nature: Applications are composed of many small, independent functions, making it hard to track a single request across multiple services.

- Ephemeral Functions: Functions are short-lived and run only when invoked, so there is no long-running host on which to run a traditional monitoring agent, and in-memory state cannot be relied on to persist between invocations.

- Vendor Lock-in: Each cloud provider offers its own set of monitoring tools (e.g., AWS CloudWatch, Azure Application Insights, Google Cloud Observability), and instrumentation built around one provider's tools does not carry over to another, which complicates multi-cloud setups and migrations.

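- Cold Starts: When a function must initialize a new execution environment (after a period of inactivity, or when scaling out to handle more concurrent requests), the extra startup latency inflates duration metrics and makes performance issues harder to reproduce.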

- Cost Optimization: Monitoring tools themselves can incur costs, requiring careful configuration to balance visibility with expenditure.
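
Because cold starts skew latency numbers, it helps to tag each invocation as cold or warm in your own telemetry. A minimal sketch, assuming a Python runtime that (like AWS Lambda) keeps the loaded module in memory across warm invocations in the same execution environment: module-level code runs once per environment, so a module-level flag is true only on the first call. The `handler` signature and the `cold_start` log field are illustrative names, not a provider API.

```python
import json

# Module-level code runs once per execution environment, i.e. on a cold start.
_is_cold_start = True


def handler(event, context=None):
    """Hypothetical handler that labels each invocation as cold or warm."""
    global _is_cold_start
    cold = _is_cold_start
    _is_cold_start = False  # later calls in this environment are warm

    # One structured log line per invocation; query on cold_start to split
    # cold-start latency from steady-state latency.
    print(json.dumps({"message": "invocation started", "cold_start": cold}))
    return {"cold_start": cold}
```

Filtering duration data on `cold_start: true` versus `false` lets you see how much of your tail latency comes from initialization rather than from your own code.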

Key Strategies for Effective Serverless Observability

1. Comprehensive Logging

Logs are your primary source of truth for understanding what your functions are doing. Best practices include:

- Structured Logging: Output logs in a structured format (e.g., JSON) to make them easily parsable and queryable by log management systems.

- Contextual Logging: Include correlation IDs (e.g., request IDs, trace IDs) in all log messages to link related events across different function invocations and services.

- Appropriate Logging Levels: Use different logging levels (DEBUG, INFO, WARN, ERROR, FATAL) to control the verbosity and prioritize critical information.

- Centralized Log Management: Send logs to a centralized service (e.g., AWS CloudWatch Logs, Azure Log Analytics, Google Cloud Logging, or third-party solutions like Datadog, Splunk, Elastic Stack) for aggregation, searching, and analysis.
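
The practices above can be combined using only Python's standard `logging` module. This is a minimal sketch, not a specific vendor's logger: each record is rendered as one line of JSON, a correlation ID is attached to every line through a `LoggerAdapter`, and the logger's level filters out DEBUG output. The `x-correlation-id` header, the `orders` logger name, and the JSON field names are hypothetical conventions; substitute whatever your services already propagate.

```python
import json
import logging
import sys
import uuid


class JsonFormatter(logging.Formatter):
    """Render each log record as a single line of JSON."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Set via `extra=` (the LoggerAdapter below does this); None if absent.
            "correlation_id": getattr(record, "correlation_id", None),
        }
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def make_logger(name: str = "orders", level: int = logging.INFO) -> logging.Logger:
    logger = logging.getLogger(name)
    logger.setLevel(level)  # records below this level (e.g. DEBUG) are dropped
    if not logger.handlers:  # warm invocations reuse the logger; don't add twice
        stream = logging.StreamHandler(sys.stdout)
        stream.setFormatter(JsonFormatter())
        logger.addHandler(stream)
    # Keep records away from the root logger, which some runtimes configure
    # with their own (non-JSON) handler.
    logger.propagate = False
    return logger


def handler(event, context=None):
    logger = make_logger()
    headers = event.get("headers") or {}
    # Reuse the caller's correlation ID if present; otherwise start a new one.
    correlation_id = headers.get("x-correlation-id") or str(uuid.uuid4())
    log = logging.LoggerAdapter(logger, {"correlation_id": correlation_id})
    log.debug("raw event: %s", event)  # suppressed at INFO level
    log.info("order received")
    log.warning("inventory below reorder point")
    return correlation_id
```

Writing one JSON object per line to stdout is a reasonable default for functions, since many serverless platforms forward stdout to their log service, where the fields become searchable and the correlation ID can be used to join events across services.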

2. Robust Metrics Collection

Metrics provide a quantifiable view of your system's performance and health:

- Standard Metrics: Monitor essential function metrics like invocations, errors, duration, throttles, and concurrent executions.

- Custom Metrics: Instrument your code to emit custom application-specific metrics (e.g., number of successful transactions, user sign-ups, API call latencies) that are critical to your business logic.

- Alerting: Set up alerts based on predefined thresholds for critical metrics to proactively identify and respond to issues (e.g., high error rates, long durations, unusual invocation patterns).
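
On AWS, one way to emit custom metrics without an API call per data point is CloudWatch's Embedded Metric Format (EMF): a JSON log line with an `_aws` metadata block from which CloudWatch extracts the metric. The sketch below builds such a line by hand (Powertools for AWS Lambda offers a higher-level `Metrics` utility for this) and pairs it with a simplified error-rate rule of the kind you would configure as an alarm. The namespace, service name, metric name, and 5% threshold are illustrative assumptions.

```python
import json
import time


def emf_metric(namespace: str, service: str, name: str, value: float,
               unit: str = "Count") -> str:
    """Build one CloudWatch Embedded Metric Format (EMF) log line.

    Written to a CloudWatch Logs stream (e.g. printed to stdout from a Lambda
    function), the line is turned into a metric with a `Service` dimension.
    """
    doc = {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # epoch milliseconds
            "CloudWatchMetrics": [
                {
                    "Namespace": namespace,
                    "Dimensions": [["Service"]],
                    "Metrics": [{"Name": name, "Unit": unit}],
                }
            ],
        },
        "Service": service,  # dimension value
        name: value,         # metric value, keyed by the metric name
    }
    return json.dumps(doc)


def error_rate_breached(errors: int, invocations: int,
                        threshold: float = 0.05) -> bool:
    """Simplified alert rule: fire when errors / invocations exceeds threshold."""
    if invocations == 0:
        return False  # no traffic: treat as not breaching instead of dividing by zero
    return errors / invocations > threshold
```

Inside a Lambda handler, `print(emf_metric("Shop", "checkout", "SuccessfulTransactions", 1))` would record one successful transaction under a `Service=checkout` dimension. In practice the threshold rule lives in the alarm definition (for example, a CloudWatch alarm on a metric-math error rate), not in function code; it is written as a function here only to make the logic explicit.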

3. Distributed Tracing

Tracing is crucial for understanding the flow of requests in a distributed serverless environment:

- End-to-End Visibility: Trace requests as they traverse multiple functions, APIs, and databases to pinpoint performance bottlenecks or failures within the entire transaction.

- Standardized Tracing Protocols: Use open standards like OpenTelemetry (the successor to the now-archived OpenTracing and OpenCensus projects) for consistent instrumentation across different services and languages.

- Integration with APM Tools: Leverage Application Performance Monitoring (APM) tools (e.g., AWS X-Ray, Azure Monitor, Google Cloud Trace, New Relic, Dynatrace) that provide visual representations of traces and help identify root causes.
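
OpenTelemetry SDKs handle context propagation for you, but it helps to see what they carry between functions. Below is a hand-rolled sketch of the W3C Trace Context `traceparent` header, the default propagation format in OpenTelemetry: each hop keeps the trace ID and generates its own span ID. AWS X-Ray uses its own `X-Amzn-Trace-Id` header, which this sketch does not cover, and the function names are illustrative.

```python
import re
import secrets
from typing import Optional

# W3C `traceparent`: version-traceid-parentid-flags, lowercase hex.
# This sketch only accepts version 00.
_TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def parse_traceparent(header: Optional[str]) -> Optional[tuple[str, str, str]]:
    """Return (trace_id, parent_span_id, flags), or None if missing or invalid."""
    if not header:
        return None
    m = _TRACEPARENT.match(header.strip())
    if not m:
        return None
    trace_id, span_id, flags = m.groups()
    # All-zero IDs are invalid per the specification.
    if trace_id == "0" * 32 or span_id == "0" * 16:
        return None
    return trace_id, span_id, flags


def child_traceparent(incoming: Optional[str]) -> str:
    """Continue the caller's trace with a new span ID, or start a new trace."""
    parsed = parse_traceparent(incoming)
    if parsed:
        trace_id, _parent_span, flags = parsed
    else:
        trace_id, flags = secrets.token_hex(16), "01"  # new trace, marked sampled
    span_id = secrets.token_hex(8)  # this function's own span
    return f"00-{trace_id}-{span_id}-{flags}"
```

A function would read `traceparent` from the incoming request, log the trace ID alongside its other fields, and send the value returned by `child_traceparent` on any outbound call, so a tracing backend can stitch the hops into one end-to-end view. In production, prefer an OpenTelemetry propagator over hand-rolled parsing; this version skips details such as the companion `tracestate` header and future version formats.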

Tools and Services for Serverless Observability

Each major cloud provider offers native tools, and there's a thriving ecosystem of third-party solutions:

- AWS: CloudWatch (Logs, Metrics, Alarms), X-Ray (Tracing), Powertools for AWS Lambda (Logging, Metrics, Tracing utilities).

- Azure: Application Insights (Monitoring, Logging, Tracing), Log Analytics (Log management), Azure Monitor (Metrics, Alerts).

- Google Cloud: Google Cloud Observability (previously Operations Suite, and before that Stackdriver), which includes Cloud Logging, Cloud Monitoring, and Cloud Trace.

- Third-Party Tools: Datadog, New Relic, Splunk, Elastic Stack (ELK), Dynatrace, Lumigo, Thundra, Sentry, Prometheus, Grafana. These often provide cross-cloud capabilities and advanced features.

Implementing Observability Best Practices

- Instrument Early and Often: Embed logging and tracing within your code from the outset of development, not as an afterthought.

- Automate Deployment: Include observability configurations (e.g., log groups, alarms, tracing settings) as part of your Infrastructure as Code (IaC) templates (e.g., AWS SAM, Serverless Framework, Terraform).

- Define Key Performance Indicators (KPIs): Identify what metrics truly matter for your application's success and reliability, and focus your monitoring efforts on them.

- Practice Chaos Engineering: Periodically introduce controlled failures to test your observability and alerting mechanisms.

- Educate Your Team: Ensure all developers understand the importance of observability and how to effectively use the tools provided.
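
Chaos experiments do not need heavy tooling to start. A minimal sketch, assuming you control the function's environment variables: a decorator that raises a deliberate error on a configurable fraction of invocations, so you can check that error metrics, alarms, and logs react as expected. The `FAULT_INJECTION_RATE` variable and the `InjectedFault` exception are hypothetical names chosen for this example.

```python
import functools
import os
import random
from typing import Callable, Optional


class InjectedFault(RuntimeError):
    """Raised on purpose so alerting can be verified end to end."""


def inject_faults(rate_env: str = "FAULT_INJECTION_RATE",
                  rng: Optional[random.Random] = None) -> Callable:
    """Decorator that fails roughly `rate` of all calls.

    The rate is read from an environment variable on every call
    (hypothetical name; unset or 0 disables injection).
    """
    rng = rng or random.Random()

    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            try:
                rate = float(os.environ.get(rate_env, "0"))
            except ValueError:
                rate = 0.0  # malformed setting: fail safe, inject nothing
            if rng.random() < rate:
                raise InjectedFault(f"chaos: injected failure in {fn.__name__}")
            return fn(*args, **kwargs)
        return wrapper
    return decorator


@inject_faults(rng=random.Random(42))  # seeded only to make runs repeatable
def handler(event, context=None):
    return {"status": "ok"}
```

Raise the rate in a test environment, then confirm that your error-rate alert fires and that the failed invocations can be found in your logs. Reading the rate on every call keeps the experiment adjustable without a code change, and an unset or malformed value disables injection rather than breaking the function.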

Conclusion

Serverless observability is not a luxury; it's a necessity for building robust, performant, and maintainable serverless applications. By embracing comprehensive logging, robust metrics collection, and distributed tracing, developers and operations teams can gain deep visibility into their serverless workloads, enabling faster debugging, proactive issue resolution, and continuous optimization. Invest in your observability strategy, and you'll unlock the full potential of serverless computing.